Portfolio

- TreesAI++ Integrated Climate Resilience Modelling

- Next Generation Modelling System: NAME

- Liverpool City Region High Demand Density

- Rust-Up: Using Rust in Scientific and High Performance Computing Setting

- TreesAI Integrated Climate Resilience Modelling

- Confidential: Database with a Web-Interface

- Big Hypotheses: Improving Performance of Sequential Monte Carlo Method

- CReDo: Climate Resilience Demonstrator

- Ada Lovelace Code: Neutron Reflectometry

- WormRight

- Implicit-Factorisation Preconditioners for NEPTUNE Programme

- Exascale Computing Project

- Mathematics for Competitive Advantage

- Validation and Verification of an AI/ML System

- Parallel I/O for DL_MESO: NetCDF and HDF5

- Enabling UMMAP to Understand GROMACS File Formats

-

TreesAI++ Integrated Climate Resilience Modelling

Developers: Maksims Abaļenkovs, Geoffrey Dawson, Junaid Butt, Katharina Reusch Tech Stack: C, StarPU, OpenMP, MPI, Perl Duration: 10 months Status: completed Integrated Flood Modelling (IFM) lied at the core of running GeoDN workflows. It was the main computation engine simulating the water flow during a flood. IFM was parallelised for shared-memory execution via OpenMP. It provided good performance scaling, but it was limited to a single compute node. This project aimed to interact with large-scale models, i.e. multiple flood scenarios with different grid resolution for various regions (cities) running simultaneously. Hence, distributed memory parallelisation was vital to the project’s success.

My goal was to deliver a distributed memory version of IFM. I chose to apply StarPU runtime. StarPU is based on a novel task-based parallel programming paradigm. This runtime provides automatic scheduling and execution of computationally intensive kernels on CPU, GPU and FPGA in shared and distributed memory modes.

I developed a small prototype code in C imitating computation and communication patterns of IFM. First, I replicated the shared-memory OpenMP functionality in StarPU. Then I upgraded StarPU functions to operate in distributed-memory mode. Initial performance tests confirmed good scaling of the prototype in distributed-memory mode. However, I faced considerable difficulties porting my StarPU ideas from prototype to the real-world IFM code. In the end, the team decided to abandon distributed-memory parallelisation with StarPU due to the lack of time left on the project.

-

Next Generation Modelling System: Numerical Atmospheric-dispersion Modelling Environment (NAME)

Developers: Maksims Abaļenkovs, Andrew Sunderland Tech Stack: Fortran, GNU Make Duration: 11 months Status: ongoing Numerical Atmospheric-dispersion Modelling Environment (NAME) is a Lagrangian particle model which calculates the dispersion of materials released into the atmosphere by tracking model “particles” through the modelled atmosphere. These particles move with the resolved wind described by input meteorology, which can vary in space and time. The particles motion also has a random component to represent the effects of atmospheric turbulence. A consequence of this is that no assumptions need to be made for the shape of the concentration distribution, such as are required in Gaussian plume models.

-

Liverpool City Region High Demand Density

Developers: Maksims Abaļenkovs, Alessandro Raschella Tech Stack: MATLAB Duration: 14 months Status: ongoing This project focuses on creating a series of experimental mobile network setups at various venues in Liverpool. The main aim of the project is to explore Open Radio Access Network (OpenRAN) approach to creating efficient mobile networks. My role in this project lies in identification of potential OpenRAN use-cases that can benefit from AI/ML. Once OpenRAN use-cases are identified I will introduce AI/ML algorithms to optimise mobile networks depending on the workload. Currently I identified three OpenRAN use-cases to take forward

- Use-case 4: QoE optimisation,

- Use-case 5: Traffic steering,

- Use-case 8: QoS based resource optimisation,

- Use-case 21: Energy saving

and started learning, how to apply MATLAB to prototype AI/ML scenarios. Successful ML application needs good domain knowledge and ability to extract quality features from the data. This feature extraction may take a considerable amount of time.

-

Rust-Up: Using Rust in Scientific and High Performance Computing Setting

Developers: Maksims Abaļenkovs, Ciaron Howell Tech Stack: Rust Duration: 6 months Status: completed I learnt Rust in a six-month period allocated by the Hartree Training team. I structured a one-day course into four pairs of lectures and exercises. Together with Ciaron Howell we developed over 200 lecture slides in PowerPoint. I created multiple practical exercises to reinforce learning. There were three to six exercises for each section of the course. Ciaron and I prepared solutions for these exercises. The first course was delivered on April 12, 2024.

-

TreesAI Integrated Climate Resilience Modeling (with IBM Research, Dark Matter Labs, Lucid Minds)

Developers: Maksims Abaļenkovs, Sarah Jackson, Geoffrey Dawson, Junaid Butt, Katharina Reusch Tech Stack: C, OpenMP, MPI, Python, Perl Duration: 7 months Status: completed This was an HNCDI project in collaboration with IBM Research, Dark Matter Labs and Lucid Minds. The main idea of the project consisted in creating a simulation workflow. This workflow predicts, how growing more trees in urban areas can help reduce flooding. The workflow was based around IBM’s climate modelling platform called GeoDN. I worked on the main mathematical engine of this workflow called Integrated Flood Modelling (IFM). I modified the C&nbps;source code to introduce additional command-line options. There were two new things that I added:

- opportunity to skip the infiltration stage and

- ability to profile the code, i.e. measure time taken by each simulation stage.

I created multiple Perl scripts to conveniently launch experiments on Scafell Pike and developed a Docker container with IFM. I ensured the OpenMP environment variables were set correctly to fully exploit the shared-memory parallelism. I experimented with the distributed memory version of IFM, but unfortunately it was not possible to run it. There are considerable differences in input files between the shared- and distributed-memory versions of IFM.

-

Confidential: Database with a Web-Interface (with Cube Thinking)

Developers: Maksims Abaļenkovs, Mark Birmingham Tech Stack: MongoDB, Rust, JavaScript Duration: 6 months Status: completed This is a confidential proof-of-concept project sponsored internally by the STFC. It's main aim is twofold:

- to help UK start-ups apply for funding and

- to help Innovate UK sponsor promising UK companies

I'm a principal investigator on this project. With help of Jason Kingston (Cube Thinking) and Richard Harding (Hartree Centre) I envisioned and designed the entire system. The key technical elements of the system are the database and its web interface. I developed the backend powered by a MongoDB instance running in the cloud. I wrote multiple Rust programs to retrieve the data from various sources, format and store it in the database.

-

Big Hypotheses: Improving Performance of Sequential Monte Carlo Method (with University of Liverpool, Signal Processing Group)

Developers: Maksims Abaļenkovs, Alessandro Varsi Tech Stack: C++, OpenMP, MPI, NVIDIA CUDA, Perl, Stan, Stan Math Library, Intel Advisor, Intel VTune Profiler Duration: 18 months Status: completed Initial ambition of this project was to port computationally expensive phases of Sequential Monte Carlo with Stan (SMCS), an in-house statistical modelling software, to GPUs.

First, I verified the SMCS code hotspots with Intel's Advisor and VTune Profiler. I conducted performance profiling in shared and distributed memory environments. I discovered poor SMCS performance on shared-memory architectures.

Results of profiling parallel SMCS code with Intel's Advisor. There were five experiments with various distributed process (p) and shared-memory thread (t) numbers. These process and thread combinations are shown in the legend. x-axis depicts the SMCS function names and y-axis—total time spent per function. I designed a prototype CUDA code to call log_prob, one of the key Stan Math functions used in SMCS. Unfortunately, numerous experiments with NVIDIA CUDA compiler and its options proved fruitless. It was impossible to link together the core C++ code with a CUDA kernel calling log_prob. This happened due to generous inclusions of Stan Math functions into the final code and defining most of Stan code in *.h header files.

Results of evaluating parallel SMCS code with Intel's VTune Profiler. There were five experiments with various distributed process (p) and shared-memory thread (t) numbers. These process and thread combinations are shown in the legend. x-axis depicts the SMCS function names and y-axis—effective time spent per function. The team decided to take a step back, resolve shared-memory issues and tap into the GPU power via OpenMP offload pragmas. Automatic-differentiation (AD) tapes shown to be the main roadblock preventing shared-memory scaling. Stan developers suggested creating dedicated AD tapes for each OpenMP thread. I programmed a prototype OpenMP tool to intercept thread creation events and instantiate AD tapes. However, this did not resolve performance problems. The new culprits were identified: these are memory allocation calls inside OpenMP parallel regions. Investigation is ongoing.

-

CReDo: Climate Resilience Demonstrator (with ...)

Developers: Maksims Abaļenkovs, Mariel Reyes Salazar, Benjamin Mawdsley, Tim Powell, Benjamin Mummery (Hartree Centre and University of Edinburgh only) Tech Stack: Python, NetworkX, Podman, Docker Duration: 4 months Status: completed ...

-

Ada Lovelace Code: Neutron Reflectometry (with ISIS)

Developers: Maksims Abaļenkovs, Ciaron Howell, Valeria Losasso, Arwel Hughes Tech Stack: Perl, C++, MATLAB, GNU Octave, Apptainer, Docker, Nextflow Duration: 15 months Status: completed Neutron reflectometry provides insight into layered molecular structures (e.g. supported lipid bilayers as models for biological membranes). Resolution of neutron reflectometry studies can be enhanced by integrating scattering length density profiles from molecular dynamics simulations into composite models describing the sample, support and surrounding medium [1].

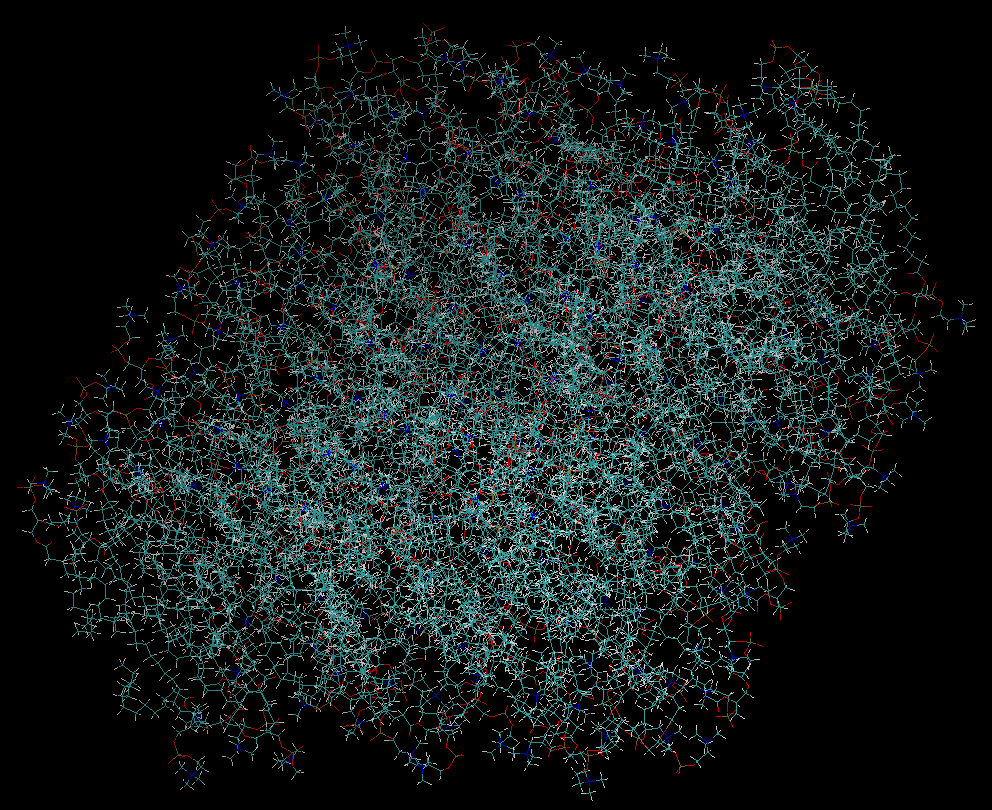

Example of a simulated lipid bilayer with 26,000 atoms. The main goal of this project was to pipeline molecular dynamics simulation with reflectometry analysis software. This workflow consisted of two simulation phases:

- molecular dynamics and

- neutron reflectometry.

To streamline numerous experiments with various options both phases were containerised. I created and tested a CentOS-based Apptainer to setup the core simulation engines—Visual Molecular Dynamics (VMD) and Nanoscale Molecular Dynamics (NAMD). This container included Python, PyBILT, Perl, Charm++, FFTW and Tcl. I also developed a series of Perl scripts to launch molecular dynamics experiments in a quick and convenient manner.

Perl subroutine to modify Slurm scripts. The neutron reflectometry part was developed based on a custom Docker container with MATLAB, its Parallel Computing Toolbox, ParaMonte and an in-house simulation package called Reflectivity Algorithms Toolbox for Rascal (RAT). Initial attempts to embed an Octave interpreter into the autogenerated C++ code failed. Compute-intensive engine of RAT was automatically converted to C++ by MATLAB Coder. Original idea was to run RAT with an autogenerated C++ code from Octave. But differing definitions of mxArray data structure in MATLAB and Octave prevented the use of Octave.

Basic Nextflow scripts were written to execute independent experiment stages in parallel. New containers were passed on to STFC scientists. ISIS researchers uploaded them to Harbor, a local container registry, and made them available for internal use.

-

WormRight (with Royal Agricultural University)

Developer: Maksims Abaļenkovs Tech Stack: Python, TensorFlow 2, LabelImg, Cucumber Duration: 14 months Status: completed The main idea of this proof-of-concept project was to test, if it is possible to develop a machine learning model to detect earthworm casts. This project aimed to help the Royal Agricultural University researchers. Their hypothesis is that earthworm casts are a good indicator of farming land fertility. It is usually hard to whiteness earthworms on the land, but their casts remain there for a number of days.

Labelled earthworm casts. I was a technical leader on the project. Initially I used Cucumber, a behaviour-driven development methodology, to identify the need for a high quality dataset with earthworm casts. Next we developed a protocol for collecting the images. Then I used LabelImg software to label two sets of images with 145 and 827 photographs. Finally, I set up an STFC cloud instance with an NVIDIA Tesla V100 GPU to train a custom object detection model. I experimented with two pre-trained models from TensorFlow 2 Detection Model Zoo: SSD ResNet{50,101,152} V1 FPN 640×640 [2] and EfficientDet D1 640×640 [3]. Both models were trained further on the labelled earthworm cast images. Models used in the experiments relied on Microsoft's Common Objects in Context (COCO) metrics [4].

Mean average precision of SSD ResNet50: x-axis shows the number of model training iterations, while the y-axis denotes the loss value. Depending on the strictness of the Intersection over Union (IoU) threshold, the SSD ResNet50 was able to detect between 3% and 27% of earthworm casts. This project proved that it is possible to train a machine learning model for the detection of earthworm casts. However, the precision and recall values are not high. More work is required to tune the model training for better cast detection.

-

Implicit-Factorisation Preconditioners for NEPTUNE Programme

(with UKAEA)Developers: Maksims Abaļenkovs, Emre Sahin Tech Stack: BOUT++, Nektar++, C++, PETSc, Matrix Market, MATLAB, MPICH, OpenMP Duration: 4 months Status: completed This proof-of-concept project for the UKAEA aimed at testing in-house MCMCMI and MSPAI preconditioners in the numerical simulation packages BOUT++ and Nektar++. At first we identified the key simulation problems of interest to the UKAEA. Then we estimated the possibility of integrating our preconditioners into the software packages. My responsibility in this project lied with the BOUT++ software. Due to the matrix-free nature of the differential equation solvers in BOUT++ direct integration of in-house preconditioners deemed possible, but time-consuming. That's why we decided to approach the problem in two stages:

- export system matrices and the right-handside vectors from BOUT++ and

- import them into MCMCMI and MSPAI for testing.

PETSc solver powering the BOUT++ computation allowed me to export the matrices in the *.mtx Matrix Market format. Performance tests of the MCMCMI and MSPAI preconditioners on a range of system matrices and right-handside vectors were conducted successfully.

-

Exascale Computing Project (ECP)

Developer: Maksims Abaļenkovs Tech Stack: C++, GNU Octave, ParMETIS library, HDF5 library Duration: 6 months Status: completed My role in the project comprised of learning and prototyping multiple mathematical methods: the Markov Chain Monte Carlo Matrix Inversion (MCMCMI) method and the Casimir interactions method, as well as analysing the application of ParMETIS library for direct solvers and developing an example code for reading and writing large sparse matrices in CRS format. These matrices are read and written to hard disk by means of the parallel HDF5 library with compression.

-

Mathematics for Competitive Advantage (MACAD) (A4I with Maxeler)

Developers: Maksims Abaļenkovs, Emre Sahin Tech Stack: C, C++, Perl, GNU Octave Duration: 12 months Status: completed The main goals of this project were:

- adopt custom number formats, alternative to standard IEEE754 single and double precision in order to save memory and speed-up algorithm execution on FPGAs and

- design a general methodology for analysing numerical linear algebra algorithms and efficiently porting them to FPGA hardware.

The target algorithms were QR decomposition and Markov Chain Monte Carlo Matrix Inversion (MCMCMI) method. Classical Householder reflectors’ QR algorithm as well as compact block representations with WY and YTYT were developed in GNU Octave and ported to C and C++ for efficiency. Selected algorithms were value profiled with Maxeler’s profiling library. Possibilities for utilising custom arbitrary float and fixed point offset number formats were detected. Prototype C++ code enabling custom precision datatypes was developed. QR and MCMCMI algorithms in arbitrary float and fixed point offset datatypes (with various number of exponent and mantissa bits as well as total and fractional bit numbers) were tested. Experiments were performed with help of Maxeler library simulating custom number formats on CPUs. Optimal number format for QR was arbitrary float with 6 exponent and 9 mantissa bits. MCMCMI achieved peak performance in a fixed point offset format with 16 bits comprised of 2 integer and 14 fractional bits. Both algorithms were ported to Maxeler’s FPGA cards. Extensive performance tests of QR and MCMCMI on CPU and FPGA architectures were conducted.

Semi-automated workflow for efficient porting of numerical algorithms to FPGAs was proposed. Bisection, golden section and Nelder–Mead–Adamovich algorithms were utilised to detect the optimal number of bits in a pair (exponent and mantissa bits for the arbitrary float or total and fractional bits for the fixed point offset).

Developed source code is open and is publicly available in the MACAD Git repository. Results of this work were published and presented at the International Conference for High Performance Computing, Networking, Storage, and Analysis (SC21) in November 2021.

-

Validation and Verification of an AI/ML System (A4I with KADlytics)

Developers: Maksims Abaļenkovs, Michail Smyrnakis Tech Stack: Python, C, OpenMP, Jupyter notebooks, NetworkX, NumPy modules Duration: 12 months Status: completed During this project we created a unique scientifically proven methodology for validation and verification of an AI system. The major difficulty hindering classical evaluation of the system was the lack of ground truth data. The developed methodology operates with the minimum (or no) ground truth data and applies to any AI/ML system that can be represented as a graph. Project repository contains Python and C source code to conduct the analysis, multiple Jupyter notebooks explaining the key concepts used in this evaluation as well as in-depth technical reports. A follow-up research paper is in preparation.

-

Parallel I/O for DL_MESO: NetCDF and HDF5 (IROR)

Developers: Maksims Abaļenkovs, Chris Dearden Tech Stack: Fortran, MPICH, NetCDF, HDF5 libraries Duration: 6 months Status: completed In this project we worked on an in-house computational chemistry suite called DL_MESO, DPD. We enhanced parallel output capabilities of DPD. The software was extended to organise and output simulation results in scientific data formats such as NetCDF and HDF5. Performance results on Scafell Pike show sixfold reduction in output file size and twofold reduction in average memory (RAM) utilisation. Results were presented to Michael Seaton, the main developer of DL_MESO, and integrated into the software. Auxiliary Git repository sci-file-io with multiple examples on creating, reading and writing sequential and parallel *.h5 and *.nc files was created in the process.

-

Enabling UMMAP to Understand GROMACS File Formats (IROR)

Developers: Maksims Abaļenkovs, Chris Dearden Tech Stack: C, xdrfile library Duration: 3 months Status: completed UMMAP is the Hartree Centre’s in-house software tool for post-processing results of computational chemistry simulators. In this project we utilised GROMACS xdrfile library to extend UMMAP functionality for reading XTC and TRR file formats. Git repository sci-file-io with *.xtc and *.trr file readers was created. After a series of tests the XTC and TRR reading functionality was integrated into UMMAP.

References

- L. A. Clifton, S. C. L. Hall, N. Mahmoudi, T. J. Knowles, F. Heinrich, and J. H. Lakey, “Structural investigations of protein–lipid complexes using neutron scattering,” Lipid-Protein Interactions: Methods and Protocols, pp. 201–251, 2019.

- Jung, Heechul, Choi, Min-Kook, Jung, Jihun, Lee, Jin-Hee, Kwon, Soon, Y. Jung, and Woo, “ResNet-Based Vehicle Classification and Localization in Traffic Surveillance Systems,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, 2017.

- M. Tan, R. Pang, and Q. V. Le, “EfficientDet: Scalable and Efficient Object Detection,” in Proc. Computer Vision and Pattern Recognition, 2020 [Online]. Available at: https://arxiv.org/abs/1911.09070

- T.-Y. Lin, M. Maire, S. Belongie, L. Bourdev, R. Girshick, J. Hays, P. Perona, D. Ramanan, C. L. Zitnick, and P. Dollár, “Microsoft COCO: Common Objects in Context,” Proc. Computer Vision and Pattern Recognition, 2015 [Online]. Available at: https://arxiv.org/abs/1405.0312